How It Begins

Greetings, Internet wanderer, and welcome to my not-so-very-first blog post. Join me on my adventure where I train Artificial Intelligence on powerful cloud machines using frameworks that were entirely new to me. Let’s start from the beginning, as usual.

The Beginning

A few months ago, I decided to leave my job after a decade of relentless work as a mobile developer. During my time off, I nearly finished ‘Zelda: Tears of the Kingdom’ and invested what could only be described as an astronaut’s worth of training time in building my factory in ‘Factorio’.

That was a wonderful time, and I regret only one thing: in the outside world, an AI revolution was spinning, and I forgot to buy tickets for this carousel ride! That was the moment when I realized that it was game over and I should start coding once again.

This cat thinks what I’m thinking

Since the end of 2022, I have been fascinated by generative AI models, such as ChatGPT and Midjourney, and since I was a little kid I have loved video games. I even created some mobile games at the beginning of my developer career! But pretty quickly, my boss noticed that indie games and revenue don’t always go hand in hand, so we started building professional software that could generate a reasonable income.

Without a job, I am in my own boat: I am the helmsman, the fisherman, and the fish, so I set the course for combining AI and game dev. I planned to “hire” three robot minions: one for writing code, a second for generating visual content, and a third for making audio effects. Basically, I wanted to use bots to automate game development, just like I automated my factory in Factorio!

‘Old grandpa telling kids story about hiring three Artificial Intelligence models: developer, graphic designer and sound maker. Banksy’ DALL-E 3

When I was picking technologies, I wanted to choose something within reach of my prior experience: mobile platforms once more, but this time with Flutter and the Flame 2D game toolkit, since Xamarin wasn’t sparking joy anymore.

Xamarin does not spark joy anymore.

While the game engine is relatively straightforward, I initially struggled to adapt to the aesthetics of the Dart programming language. However, within a few afternoons, I had created playable characters, animated movements, and designed working levels. If you are hungry, I recommend tasting the spaghetti I cooked up for this project. The dish is served in this GitHub repository.

All things considered, having spent more than half a decade working with Xamarin, any tool that ‘just works’ felt amazing! And hey, my bots will write all that difficult code for me. After all, I’m a lazy bastard!

Behold my ‘Lazy Bastard’ achievement in Factorio, my virtual diploma in efficient laziness.

With my tech stack decided, it was time to assemble my team of bots.

To make it happen, I put on my HR suit and started looking around for artificial employees who would be happy to work for the common good for free. For the developer role, I discovered the Code Llama Instruct 34B model, which showed off working Dart code during the recruitment process. Needless to say, it was hired on the spot!

Next came the Graphic Designer; after a bit of research, I chose Stable Diffusion XL base with Refiner models. I just had to follow the tutorial from the Model Card tab and I could generate any high-quality graphics that I could ever imagine.

I was pleased because my workstation (MacBook M1 Pro, 32GB RAM) could handle both new employees. Perhaps they couldn’t work simultaneously, but surely they were slow.

“Oil painting depicting two bots: Bot named “Code LLama” that writes new code on whiteboard. Bot named “Pepe the sad frog” that draws dinosaurs on the canvas.” DALL-E 3

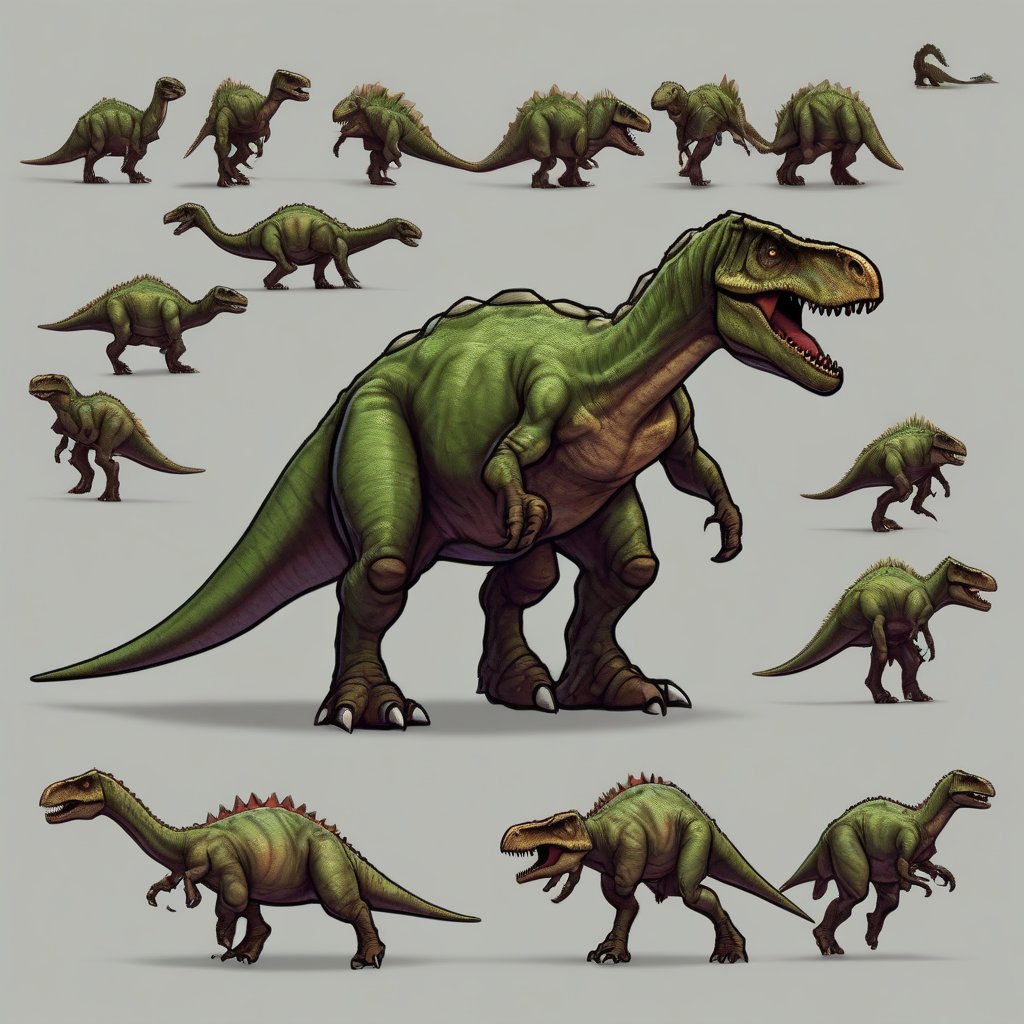

Speed wasn’t my biggest problem, though. I quickly encountered an obstacle that I couldn’t easily overcome: my artificial Graphic Designer wasn’t into animations. Generative models like Stable Diffusion could give me a single image, but generating a sequence of images, like the animation of a walking man or a jumping dinosaur, was beyond their capabilities…

The Problem

Stable Diffusion XL (SDXL) can generate whatever I imagine, yet it’s limited to a single image. But what if I instruct the model to create a sequence of similar-looking, smaller animation frames within this one image? I could formulate the prompt like this:

‘Animated sprite of a jumping dinosaur composed of 6 equal-sized frames’

The results from this prompt:

This is what we’ll get from SDXL. The exact prompt for this image, sadly, has been lost to the sands of time.

This doesn’t look good; the model struggles to generate consistent frames for animation. I was aware that I could adjust an already trained ML model to better suit my needs, and, after a short talk with Uncle Google, I found a tutorial that guided me through the process of fine-tuning the SDXL model with a Hugging Face 🤗 training script called train_text_to_image_lora_sdxl.py.
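Why does frame consistency matter so much? A game engine usually cuts a sprite sheet mechanically into equal boxes, so every frame has to sit exactly where the math expects it. Here is a minimal Python sketch of that cutting step. It assumes the frames sit side by side in a single row, and the function name and the 1536x256 sheet size are made up for illustration; this is not code from my project.

```python
from typing import List, Tuple

# A crop box as (left, top, right, bottom), in pixels.
Box = Tuple[int, int, int, int]


def frame_boxes(sheet_width: int, sheet_height: int, frame_count: int) -> List[Box]:
    """Split a single-row sprite sheet into equal-width crop boxes."""
    if frame_count <= 0:
        raise ValueError("frame_count must be positive")
    if sheet_width % frame_count != 0:
        raise ValueError("sheet width cannot be divided into equal frames")
    frame_width = sheet_width // frame_count
    return [
        (i * frame_width, 0, (i + 1) * frame_width, sheet_height)
        for i in range(frame_count)
    ]


# Hypothetical sheet: 6 frames side by side, 1536 pixels wide, 256 pixels tall.
boxes = frame_boxes(1536, 256, 6)
for index, box in enumerate(boxes):
    print(index, box)
```

If the dinosaur drifts, changes size, or spills over a box boundary between frames, the cut-out animation will jitter, which is exactly the kind of inconsistency the plain SDXL output suffers from.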

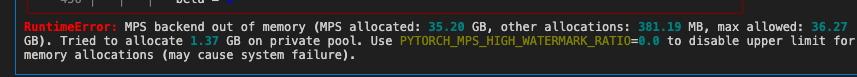

In theory, fine-tuning is the training of a neural network by slightly adjusting its existing weights to better suit our needs. In practice, I could just run the script and call it a day. However, my laptop quickly objected, as the training was very memory-hungry and soon exceeded my 32GB of available RAM.
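To make "slightly adjusting existing weights" a bit more tangible, here is a toy sketch in plain Python. It is not what the Hugging Face script does (that one trains LoRA adapters for a huge diffusion model); it only shows the general idea on a two-parameter linear model. The "pretrained" values, the new data, and the learning rate are all invented for illustration.

```python
def mse(weight, bias, data):
    """Mean squared error of y = weight * x + bias on (x, y) pairs."""
    return sum((weight * x + bias - y) ** 2 for x, y in data) / len(data)


def fine_tune(weight, bias, data, learning_rate=0.05, steps=200):
    """Start from existing parameters and nudge them with gradient descent."""
    for _ in range(steps):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in data:
            error = (weight * x + bias) - y
            grad_w += 2 * error * x / len(data)
            grad_b += 2 * error / len(data)
        weight -= learning_rate * grad_w
        bias -= learning_rate * grad_b
    return weight, bias


# Hypothetical "pretrained" model: y = 2.0 * x + 0.0
pretrained_weight, pretrained_bias = 2.0, 0.0

# Hypothetical new task, close to the old one: y = 2.2 * x + 0.1
new_data = [(x, 2.2 * x + 0.1) for x in (0.0, 1.0, 2.0, 3.0)]

tuned_weight, tuned_bias = fine_tune(pretrained_weight, pretrained_bias, new_data)

print("error before:", mse(pretrained_weight, pretrained_bias, new_data))
print("error after: ", mse(tuned_weight, tuned_bias, new_data))
```

The key point is the starting position: training begins from weights that already work reasonably well, not from random ones, so only small corrections are needed. With SDXL the same idea applies to a vastly larger set of weights, which is where all that memory goes.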

My MacBook’s 32GB of unified memory was quickly exceeded during the training process. That’s a byte-sized challenge!

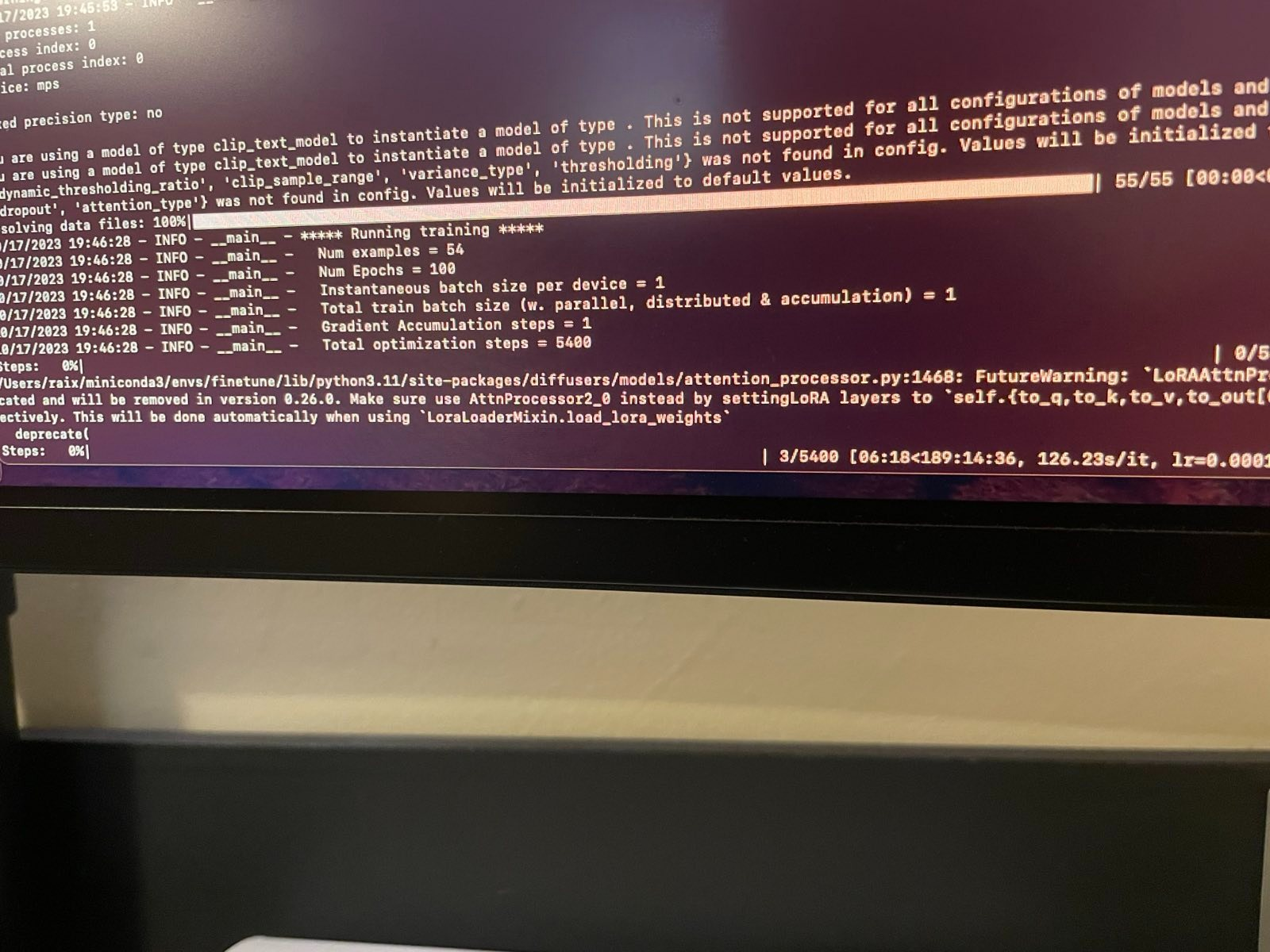

After negotiating with macOS, I managed to ‘borrow’ some memory from the SSD - or at least that’s what I think happened, because the Activity Monitor showed that 60GB of memory was in use on my 32GB machine. After a few system crashes, I finally started a training session and discovered that it would take only 189 hours. That’s OK with me, but I don’t think that my system or my SSD would survive such a hardcore workout.

A screenshot of the training output, taken with my phone, as the system was mostly unresponsive.

The Solution aka More Brains

A wise article, “The Bitter Lesson”, teaches us that with great computing power comes great Machine Learning capability. Happily, the Google TPU Research Cloud exists. I applied, and a few days later, they responded with exciting news: for the next 30 days, I would have access to:

- 5 on-demand TPU v2-8 devices

- 5 on-demand TPU v3-8 devices

- 100 preemptible TPU v2-8 devices

That’s neat, but way too much for my needs! They gave me an entire army when I just wanted a single tank!

I focused solely on a single v3-8 machine. I once tried to create a v2-8 machine as well, but Google kept throwing “The demand is way too high” errors, so I decided not to abuse their kindness any further.

It was also my first time with Google Cloud, so I’m very grateful for the detailed documentation, which guided me through setting up a new machine, connecting to it via SSH, creating a persistent disk and mounting it on Linux. You can check out the techno-wizardry details in my previous post, Configuring new VM on Google Cloud TPU.

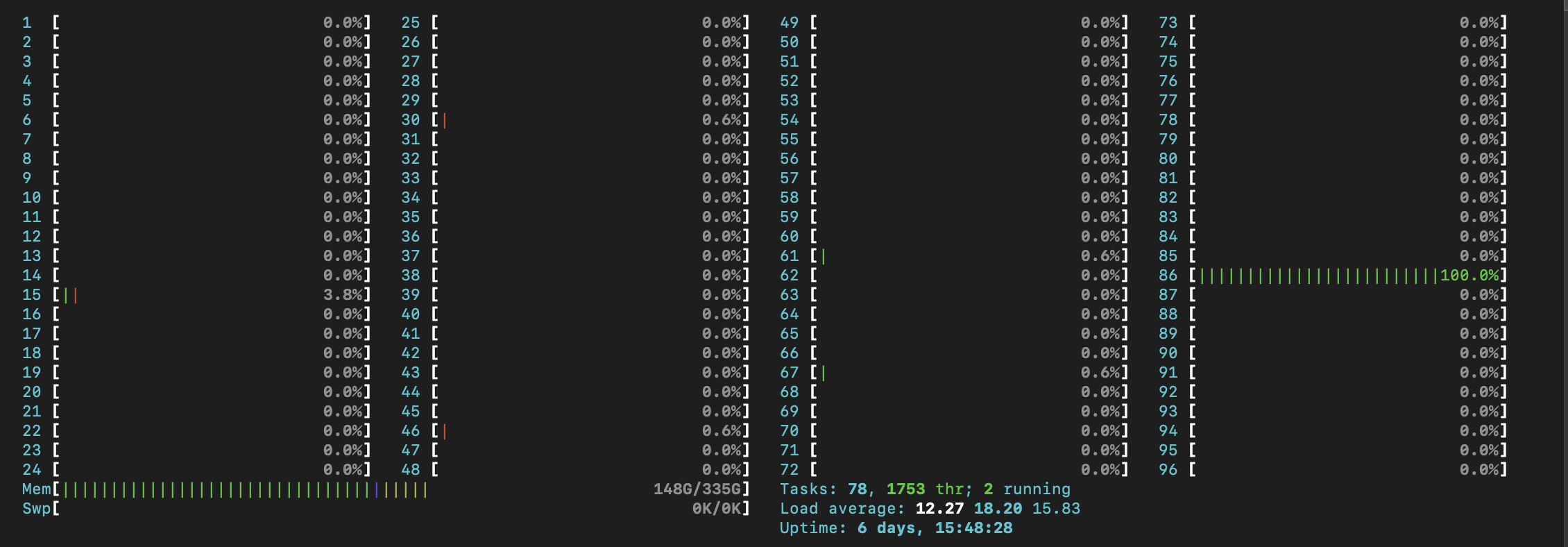

A screenshot showing the htop diagnostic tool from Google TPU v3-8. This bad boy has 96 CPU cores, a total of 335 GB of RAM, and 8 TPUs with 16GB of High Bandwidth Memory. Press F to pay respects.

Wow - the v3-8 is such a powerful machine. I’m starting to feel like a real researcher now, so I’ve lowered the priority of my game-making factory task, as a new quest has materialized: ‘Conquer Machine Learning Science’. I want to utilize every watt of this machine to learn, making sure not to waste a single minute. And so, my true journey began right here…

… To be continued.